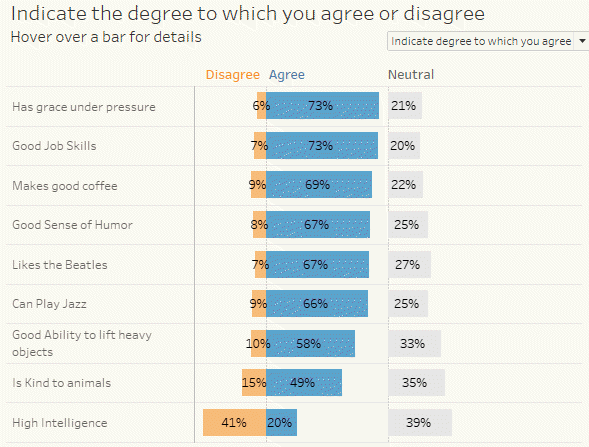

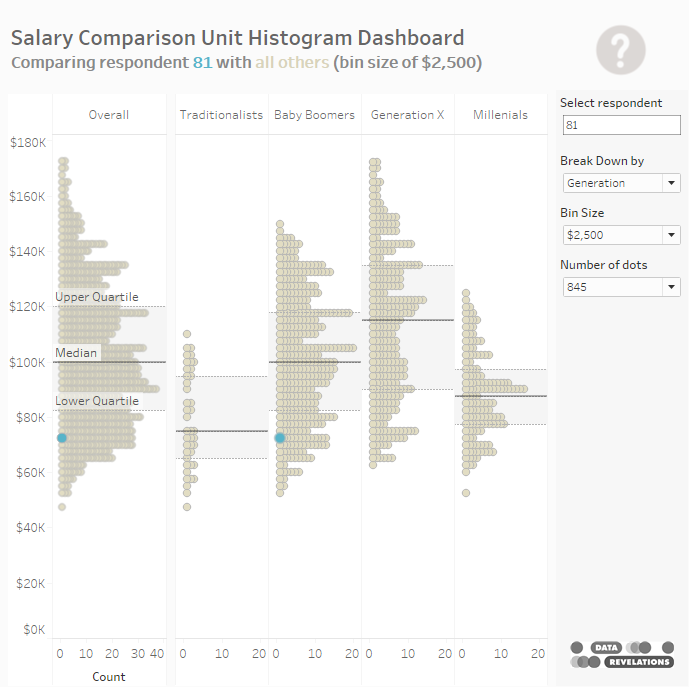

Got Likert data? Put the Neutrals off to one side

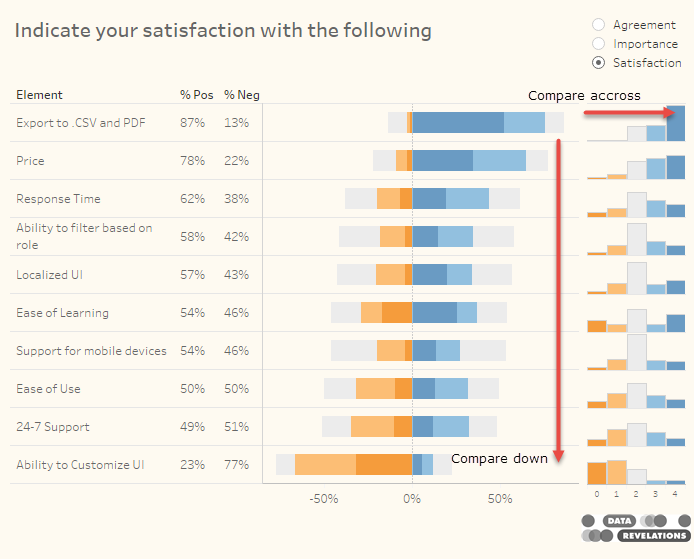

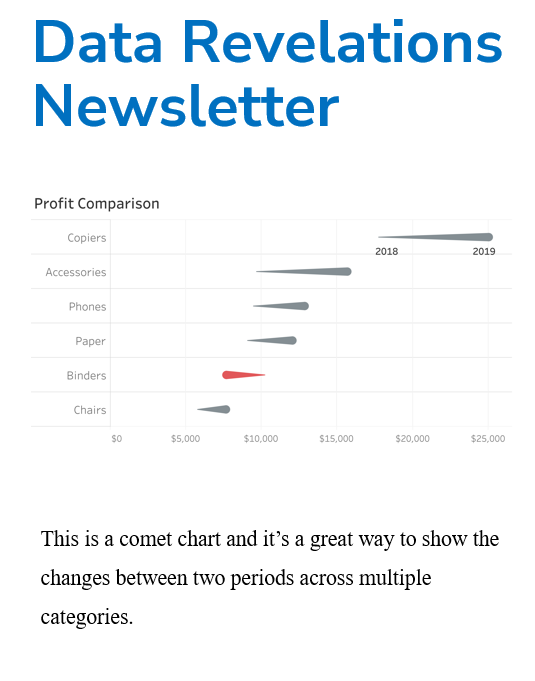

November 3, 2019

Overview

Anyone who follows this blog or visits datarevelations.com to read articles on visualizing survey data knows that I spend a lot of time thinking about how to present Likert scale data and what to do with neutral responses. I remain a stalwart supporter of some type of divergent stacked bar [...]
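To make the layout idea concrete, here is a minimal sketch of how the bar extents might be computed for a divergent stacked bar that sets the neutrals off to one side. It assumes a 5-point scale; the labels, the function name `diverging_layout`, and the choice to express everything as percentages of all responses are illustrative assumptions, not the author's exact method. Negative responses stack leftward from zero, positive responses stack rightward, and the neutral share is returned separately so it can be drawn in its own panel.

```python
from typing import Dict, List, Tuple

# Hypothetical 5-point scale labels (assumption; not taken from the post).
LABELS = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


def diverging_layout(
    counts: List[int],
) -> Tuple[Dict[str, Tuple[float, float]], Tuple[float, float]]:
    """Return (start, end) extents, in percent of all responses, for each
    non-neutral segment on an axis centred at 0, plus the neutral segment
    to be drawn in a separate bar."""
    if len(counts) != 5:
        raise ValueError("expected counts for a 5-point scale")
    total = sum(counts)
    if total == 0:
        raise ValueError("no responses")
    pct = [100.0 * c / total for c in counts]

    segments: Dict[str, Tuple[float, float]] = {}
    # Negative responses stack leftward from zero; the milder one sits nearest the centre.
    left = 0.0
    for i in (1, 0):
        segments[LABELS[i]] = (left - pct[i], left)
        left -= pct[i]
    # Positive responses stack rightward from zero; the milder one sits nearest the centre.
    right = 0.0
    for i in (3, 4):
        segments[LABELS[i]] = (right, right + pct[i])
        right += pct[i]
    # Neutral stays out of the diverging bar and starts at 0 in its own panel.
    neutral = (0.0, pct[2])
    return segments, neutral


if __name__ == "__main__":
    segs, neu = diverging_layout([10, 20, 30, 25, 15])
    for label, extent in segs.items():
        print(label, extent)
    print("Neutral (separate panel)", neu)
```

Because the neutral share is pulled out, the zero line cleanly separates disagreement from agreement, and the neutral percentage can still be read on its own common baseline.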