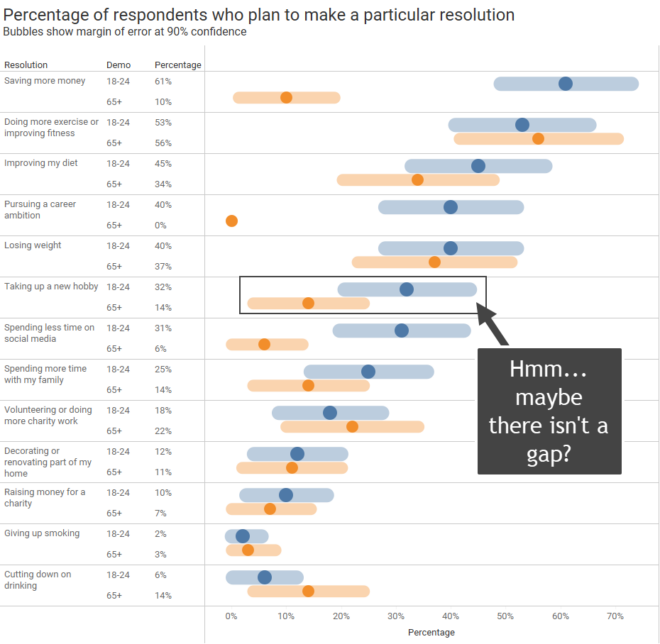

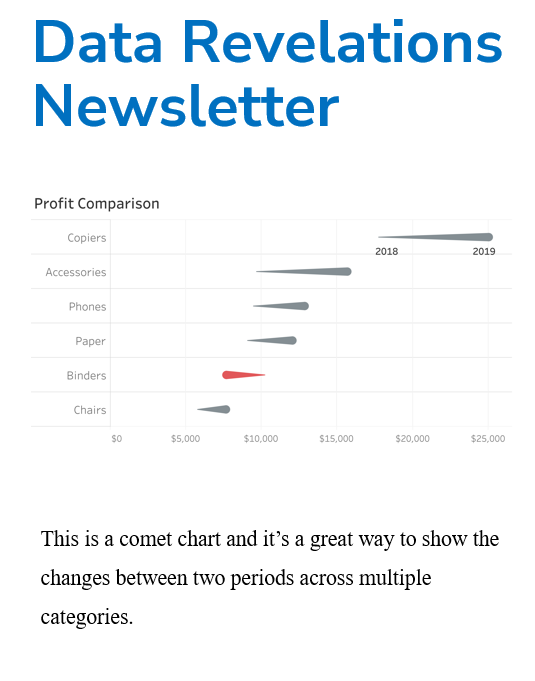

Actuals? Percentages? Why not show BOTH?

I recently saw a post on LinkedIn from Salma Sultana. If you don't already follow Salma, I encourage you to do so. Salma covers a lot of ground in her post, citing an example from Scott Berinato's book *Good Charts*, where Berinato underscores the importance of sketching and describes how he and Walter Frick brainstormed on [...]